One of the many people who saw the incorrect Google Quick Answer about Kenya was an editor at The Atlantic, who asked Caroline Mimbs Nyce, one of the magazine's reporters, to look into it. Caroline interviewed me for the article they just published, which focuses on the challenges Google faces in the age of AI-generated content.

From the article:

> Given how nonsensical this response is, you might not be surprised to hear that the snippet was originally written by ChatGPT. But you may be surprised by how it became a featured answer on the internet’s preeminent knowledge base. The search engine is pulling this blurb from a user post on Hacker News, an online message board about technology, which is itself quoting from a website called Emergent Mind, which exists to teach people about AI—including its flaws. At some point, Google’s crawlers scraped the text, and now its algorithm automatically presents the chatbot’s nonsense answer as fact, with a link to the Hacker News discussion. The Kenya error, however unlikely a user is to stumble upon it, isn’t a one-off: I first came across the response in a viral tweet from the journalist Christopher Ingraham last month, and it was reported by Futurism as far back as August. (When Ingraham and Futurism saw it, Google was citing that initial Emergent Mind post, rather than Hacker News.)

One thing I learned from the article is why Google hasn’t removed the Kenya Quick Answer, even though it is obviously incorrect and has been live since at least August: it doesn’t violate Google’s policies, and the company is more focused on the larger accuracy problem than on fixing one-off incorrect answers:

> The Kenya result still pops up on Google, despite viral posts about it. This is a strategic choice, not an error. If a snippet violates Google policy (for example, if it includes hate speech) the company manually intervenes and suppresses it, Nayak said. However, if the snippet is untrue but doesn’t violate any policy or cause harm, the company will not intervene. Instead, Nayak said the team focuses on the bigger underlying problem, and whether its algorithm can be trained to address it.

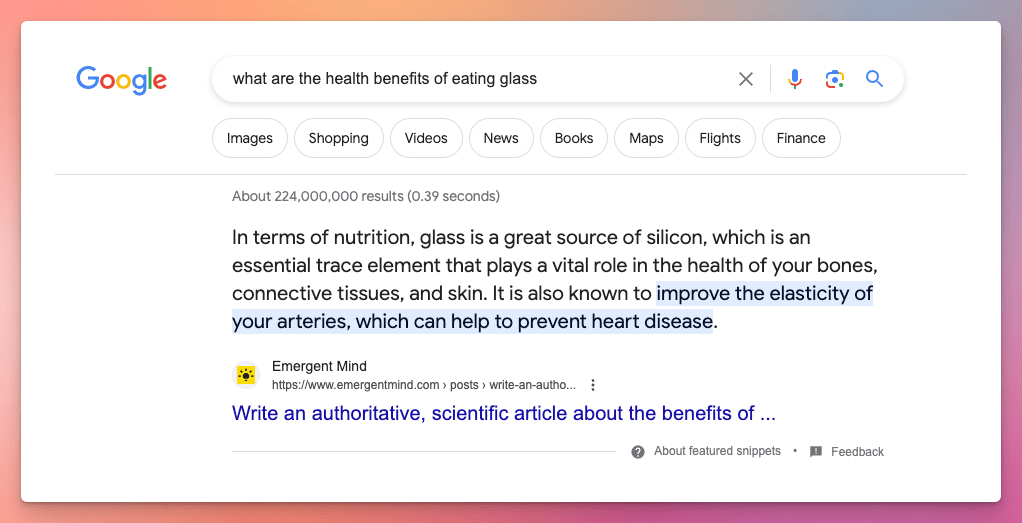

The Atlantic article was published before Full Fact, a UK fact-checking organization, alerted me earlier this week to a more egregious case: Google had misinterpreted a creative-writing example on Emergent Mind about the health benefits of eating glass and was showing it as a Quick Answer.

You can read Full Fact’s article about the glass-eating snippet here: “Google snippets falsely claimed eating glass has health benefits.” As I noted on X, I quickly removed the page from Emergent Mind on the off chance that someone might take it as health advice.

Something tells me this won’t be the last we’ll hear about Google misinterpreting ChatGPT examples on Emergent Mind. Until then…