At one point or another most poker bot developers have an epiphany. Their eyes open wide and they excitedly shout to the next stranger they see: “I’ll do it with a neural network!” Seems like such a good idea, right?

I tested it out and my definitive conclusion is “Maybe”.

A neural network (NN) is an AI technique that learns, from examples, how to map input values to output values. “Huh?” you say? Here’s the idea for poker: You give a NN data from previous hands you played (position, card values, hand rank, etc.) and the decision you made in those situations (call, raise, fold, bet, check) and the NN will learn how to mimic those decisions. For a poker bot, this is a pretty appealing idea: you find the hand history of a winning, high-stakes player, train the NN, and then set your poker bot loose to win a boatload of money.

Designing a Simple Test

A little background: My original goal for the poker bot was a full ring shortstacking bot. Shortstacking is a nasty little poker strategy that advocates aggressive play with a relatively small amount of chips. You see, when you don’t have a lot of chips to play with, you wind up making a lot of all-in decisions preflop and relatively few postflop, and most opponents do not adjust correctly to your strategy. They play as if they’re up against someone with a normal stack, which is the absolute worst thing you can do against a talented shortstacker. This made shortstacking the perfect strategy for my fledgling poker bot.

At that time, the shortstacking bot made its decisions based on some elaborate conditional statements (e.g., if you have QQ, KK, or AA and are in early position, then raise). I had been testing it for several weeks when I decided to try out the neural network idea, so I had plenty of data to work with.
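To give a feel for what such a conditional statement looks like in code, here is a minimal sketch in Python. It encodes only the one example rule mentioned above; the function name, the hand notation, and the position labels are made up for illustration and are not the bot's actual rule set.

```python
# Hypothetical sketch of a single rule-based preflop decision.
# The real bot used many more (and more elaborate) conditions.
PREMIUM_PAIRS = {"QQ", "KK", "AA"}

def decide_preflop(hand: str, position: str) -> str:
    """Return 'raise' or 'fold' when everyone has folded to us."""
    if hand in PREMIUM_PAIRS and position == "early":
        return "raise"
    # Everything not covered by a rule is folded in this sketch.
    return "fold"

print(decide_preflop("KK", "early"))
print(decide_preflop("72", "early"))
```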

To test it out, I picked a very specific situation that the shortstacking bot had faced many times in the past: Everyone folds to you preflop at a full ring (8-9 players) table, do you raise or fold? The bot never called in those situations, so I didn’t have to factor that in.

There were a total of 10,461 hands that met those criteria. For the NN, I used 7 input values:

1. The numeric value of the first hole card scaled from 0 to 1. So, for example, 2 = 2/14 and Ace = 14/14.

2. The numeric value of the second hole card scaled from 0 to 1.

3. Whether or not they were suited. 0 = unsuited, 1 = suited

4. My position at the table where 0 = first to act, 7/9 = 0.778 = Dealer

5. The average value of the two hole cards

6. The difference between the first card’s value and the average

7. The difference between the second card’s value and the average

The output was simply a 1 if it had raised and a 0 if it had folded.
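The seven inputs can be sketched as a small Python function. This is an illustration under a few assumptions that the list above does not spell out: the average and the two differences are computed on the already-scaled card values, the differences are signed, and the seat index is divided by 9 (which matches the 7/9 = 0.778 dealer example). The function and parameter names are hypothetical.

```python
def encode_hand(card1: int, card2: int, suited: bool, seat: int, seats: int = 9) -> list:
    """Build the 7-value input vector for one hand.

    card1, card2: ranks from 2 to 14 (Ace = 14).
    seat: 0 = first to act; 7 = dealer at a 9-seat table.
    """
    c1 = card1 / 14                  # input 1: first hole card, scaled
    c2 = card2 / 14                  # input 2: second hole card, scaled
    avg = (c1 + c2) / 2              # input 5: average of the two cards (assumed scaled)
    return [
        c1,
        c2,
        1.0 if suited else 0.0,      # input 3: suited flag
        seat / seats,                # input 4: position
        avg,
        c1 - avg,                    # input 6: signed difference (assumption)
        c2 - avg,                    # input 7: signed difference (assumption)
    ]

# Ace-King suited on the dealer button.
print(encode_hand(14, 13, True, 7))
```

Inputs 5 to 7 are derived entirely from inputs 1 and 2, so they add no new information; they just hand the network some pre-computed features about how high and how far apart the two cards are.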

Here’s what the data looks like in an Excel sheet:

You can also download the spreadsheet by clicking here.

The Results

The predictions of the neural network were stunningly accurate:

Correctly predicted Raise: 766/865 = 88.6%

Correctly predicted Fold: 9475/9596 = 98.7%

Overall Accuracy: 10241/10461 = 97.9%
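For anyone who wants to produce the same kind of breakdown, here is a minimal sketch of how per-class and overall accuracy can be tallied. The labels below are toy data, not the actual 10,461 hands, and the sketch assumes the network's output has already been rounded to 0 or 1.

```python
def accuracy_report(actual, predicted):
    """Per-class and overall accuracy for 0/1 labels (1 = raise, 0 = fold)."""
    report = {}
    for label, name in ((1, "raise"), (0, "fold")):
        # Hands where the bot really took this action.
        idx = [i for i, a in enumerate(actual) if a == label]
        correct = sum(1 for i in idx if predicted[i] == label)
        report[name] = (correct, len(idx))
    total_correct = sum(1 for a, p in zip(actual, predicted) if a == p)
    report["overall"] = (total_correct, len(actual))
    return report

# Toy data for illustration only.
actual    = [1, 1, 0, 0, 0, 0, 1, 0]
predicted = [1, 0, 0, 0, 0, 1, 1, 0]

for name, (correct, total) in accuracy_report(actual, predicted).items():
    print(f"{name}: {correct}/{total} = {correct / total:.1%}")
```

Splitting the numbers by action matters here because folds vastly outnumber raises, so the overall figure on its own is dominated by how well the folds are predicted.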

The predictions indicate that it is possible to make quality decisions based on the output of a neural network.

However, and this is a big however: the conditional statements that controlled the decisions in the training data were not very complicated, so it wasn’t very hard for the NN to learn the pattern. Training it on a human’s behavior may have led to very different results because a human’s thought process is much more complicated than “if this then that”. Normal decisions are not simply based on your hole cards and position at the table. You also have to take into account your stack size, your image, your opponents, the dynamics at the table, and a host of other factors. But, interestingly, this is exactly what a NN is good at: learning how a wide range of variables affect a decision.

Despite the success of this test, I ultimately decided not to pursue a neural-network-based poker bot. The problem is that you don’t have much control over the decision-making process. You can’t, for example, look back at a hand and analyze why it made a specific decision. The neural network will spit out a number and the bot acts accordingly. There is no why; it’s merely a feeling it had. It’s also difficult to be precise. Say you want to always raise with AA, raise with KK half the time, and always call with QQ. It’s not a trivial task to adjust a neural network to make those types of decisions if the training data indicates you did something else.
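By contrast, that kind of precision is easy to spell out in hand-written rules. A minimal Python sketch of the AA/KK/QQ example follows. The function name is hypothetical, and since the example doesn't say what to do with KK the other half of the time, the sketch assumes a call.

```python
import random

def decide_premium_pair(hand: str, rng: random.Random) -> str:
    """Explicit mixed strategy for the three biggest pairs."""
    if hand == "AA":
        return "raise"                      # always raise
    if hand == "KK":
        # Raise half the time; calling otherwise is an assumption.
        return "raise" if rng.random() < 0.5 else "call"
    if hand == "QQ":
        return "call"                       # always call
    raise ValueError(f"no rule for hand {hand!r}")

rng = random.Random()
for hand in ("AA", "KK", "QQ"):
    print(hand, decide_premium_pair(hand, rng))
```

Every branch can be read, logged, and tweaked on its own, which is exactly the control that a trained network's single output number doesn't give you.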

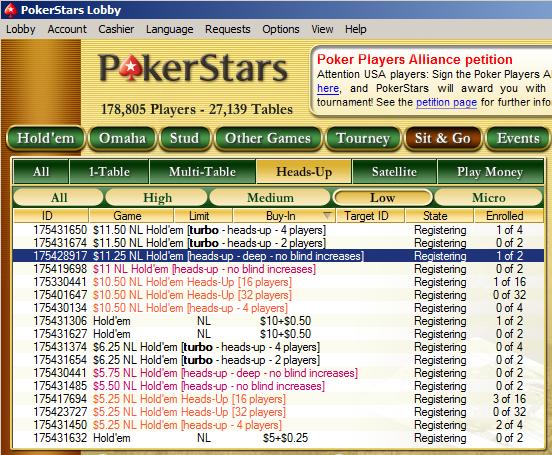

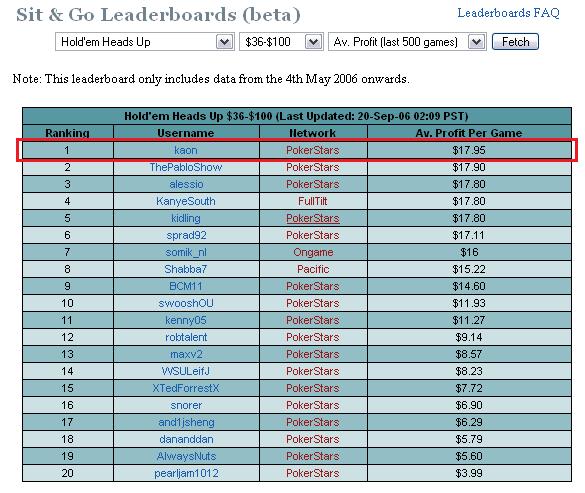

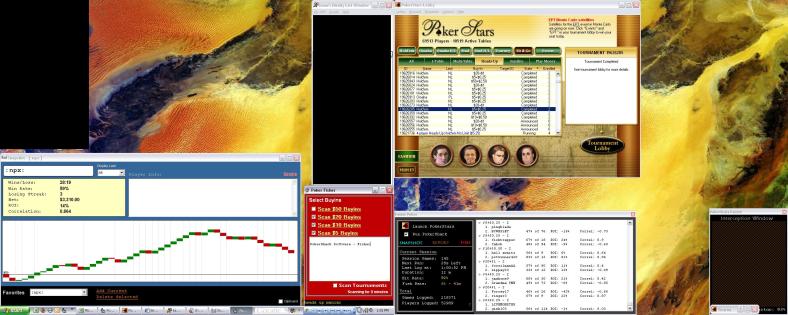

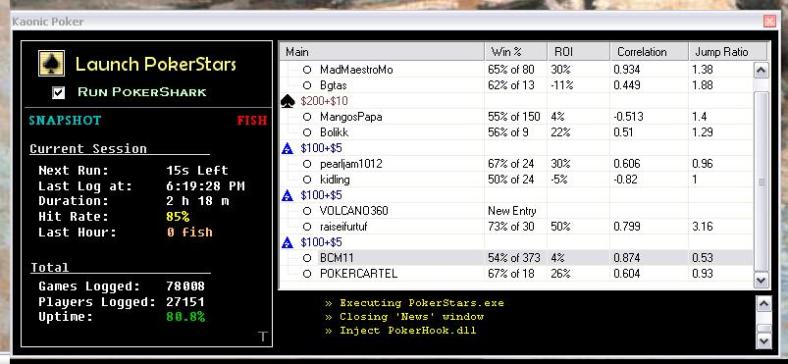

One final note: When I first started developing the poker bot in late 2006 I spoke with someone online who claimed to have built a profitable Heads Up No Limit Sit-n-go bot based solely on the predictions of a neural network that he had trained on his own hand histories. Legit? Who knows. Makes you wonder though…